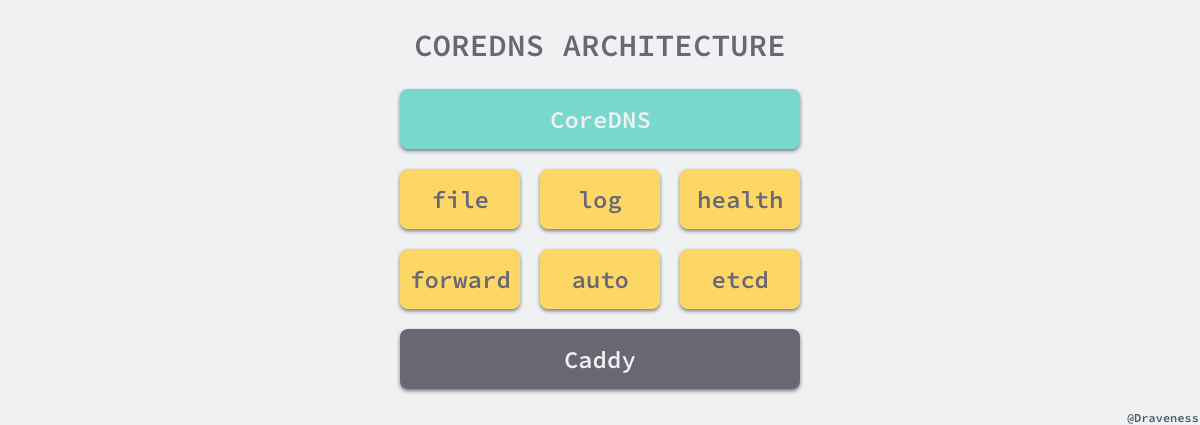

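Architecture

Setup

Clusters deployed with tools such as kubeadm normally ship with CoreDNS already installed. The manual deployment procedure is as follows:

- Create a ServiceAccount to be used for authorization

- Create the ClusterRole object system:coredns

- Bind the ServiceAccount to the ClusterRole

- Create the CoreDNS ConfigMap, which the Deployment references later. Adjust the forward and pods settings here as needed

- Create the CoreDNS Deployment; the container references the ConfigMap, and the spec sets resource limits, exposed ports, scheduling policy, and so on

- Create the Service and set its pod selector and ports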

coredns.yaml

    cat coredns.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    rules:
    - apiGroups:
      - ""
      resources:
      - endpoints
      - services
      - pods
      - namespaces
      verbs:
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
    data:
      Corefile: |
        .:53 {
            errors
            health
            # the ready plugin serves /ready on :8181, which the readinessProbe below relies on
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
              pods insecure
              fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            forward . /etc/resolv.conf
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/name: "CoreDNS"
    spec:
      # replicas: not specified here:
      # 1. Default is 1.
      # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                    - key: k8s-app
                      operator: In
                      values: ["kube-dns"]
                  topologyKey: kubernetes.io/hostname
          containers:
          - name: coredns
            image: coredns/coredns:1.8.0
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
          dnsPolicy: Default
          volumes:
          - name: config-volume
            configMap:
              name: coredns
              items:
              - key: Corefile
                path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: CLUSTER_DNS_IP
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP
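The `clusterIP: CLUSTER_DNS_IP` field in the Service is a placeholder and has to be replaced with a real address before the manifest is applied. As a minimal sketch, the Python snippet below checks that a candidate address lies inside the cluster's Service CIDR and then substitutes it into the manifest text. The helper name `fill_cluster_dns_ip` and the CIDR `10.233.0.0/18` are assumptions made for illustration; the real range is whatever your API server was started with.

```python
import ipaddress


def fill_cluster_dns_ip(manifest: str, dns_ip: str, service_cidr: str) -> str:
    """Replace the CLUSTER_DNS_IP placeholder after validating the address."""
    ip = ipaddress.ip_address(dns_ip)
    network = ipaddress.ip_network(service_cidr)
    if ip not in network:
        raise ValueError(f"{dns_ip} is outside the Service CIDR {service_cidr}")
    return manifest.replace("CLUSTER_DNS_IP", dns_ip)


# 10.233.0.110 is the kube-dns ClusterIP used in this post;
# the /18 Service CIDR is an assumed value, not taken from the post.
snippet = "  clusterIP: CLUSTER_DNS_IP\n"
print(fill_cluster_dns_ip(snippet, "10.233.0.110", "10.233.0.0/18"), end="")
```

Validating first is a deliberate choice: a ClusterIP outside the Service range is rejected by the API server, so it is cheaper to catch the mistake before applying the manifest.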

After creation:

    [root@none-nested-master hujin]# kubectl get service -n kube-system
    NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
    k8s-keystone-auth-service   ClusterIP   10.233.40.69   <none>        8443/TCP        4d20h
    kube-dns                    ClusterIP   10.233.0.110   <none>        53/UDP,53/TCP   7d2h
    [root@none-nested-master hujin]# kubectl get deploy -n kube-system
    NAME                READY   UP-TO-DATE   AVAILABLE   AGE
    coredns             1/1     1            1           7d2h
    k8s-keystone-auth   1/1     1            1           4d18h

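Update the kubelet's cluster DNS setting so that it points to the ClusterIP of the kube-dns Service (10.233.0.110 here). On clusters that deploy CoreDNS by default this value is already populated. After the change, the /etc/resolv.conf of newly created pods picks up the configured IP.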

    vim /etc/kubernetes/kubelet-config.yaml
    clusterDNS:
    - 10.233.0.110
    service kubelet restart
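For orientation, here is a minimal sketch of what a new pod's `/etc/resolv.conf` is expected to contain once `clusterDNS` is set. It assumes the pod uses the default `ClusterFirst` DNS policy and that the cluster domain is `cluster.local`. The function name `render_resolv_conf` is made up for this illustration, search domains inherited from the node are left out, and the order of the lines in a real file may differ.

```python
def render_resolv_conf(cluster_dns: str, namespace: str,
                       cluster_domain: str = "cluster.local") -> str:
    """Approximate the resolv.conf that a ClusterFirst pod receives."""
    search = [
        f"{namespace}.svc.{cluster_domain}",  # names in the pod's own namespace
        f"svc.{cluster_domain}",              # <service>.<namespace> short form
        cluster_domain,                       # anything under the cluster domain
    ]
    return (
        f"nameserver {cluster_dns}\n"
        f"search {' '.join(search)}\n"
        "options ndots:5\n"
    )


print(render_resolv_conf("10.233.0.110", "default"), end="")
```

Comparing this with `cat /etc/resolv.conf` inside a freshly created pod is a quick way to confirm that the kubelet restart took effect.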

Usage

Use the nslookup command in a busybox pod to resolve domain names

    cat busybox.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox2
      namespace: default
    spec:
      containers:
      - image: aarch64/busybox
        command:
        - sleep
        - "3600"
        imagePullPolicy: IfNotPresent
        name: busybox2
      restartPolicy: Always

Accessing a Service

Format: [svc-name].[namespace].svc.[cluster-domain]

    # Look up the domain name:
    / # nslookup my-apache.default.svc.cluster.local
    Server: 10.233.0.110
    Address 1: 10.233.0.110 kube-dns.kube-system.svc.cluster.local

    Name: my-apache.default.svc.cluster.local
    Address 1: 10.233.4.36 my-apache.default.svc.cluster.local

    # Access the service through its domain name
    sh-4.2# curl my-apache.default.svc.cluster.local
    <html><body><h1>It works!</h1></body></html>
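The Service name format can be captured in a few lines of Python. This is only a sketch of the naming rule; the helper `service_fqdn` is hypothetical, and `cluster.local` is assumed as the cluster domain because that is what the Corefile in this post uses.

```python
def service_fqdn(name: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the DNS name of a Service: <svc>.<namespace>.svc.<cluster domain>."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


print(service_fqdn("my-apache"))  # my-apache.default.svc.cluster.local
```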

Accessing a Pod

Format: [pod-ip].[namespace].pod.[cluster-domain], where the dots in the pod IP are replaced with dashes

    # Look up the domain name
    / # nslookup 10-233-127-250.default.pod.cluster.local
    Server: 10.233.0.110
    Address 1: 10.233.0.110 kube-dns.kube-system.svc.cluster.local

    Name: 10-233-127-250.default.pod.cluster.local
    Address 1: 10.233.127.250 10-233-127-250.my-apache.default.svc.cluster.local

    # Access the service through the pod's domain name
    sh-4.2# curl 10-233-127-250.default.pod.cluster.local
    <html><body><h1>It works!</h1></body></html>
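As the lookup shows, the first label of a pod name is the pod IP with its dots turned into dashes. A minimal Python sketch of that conversion follows; the helper `pod_dns_name` is hypothetical, it handles IPv4 only, and `cluster.local` is again assumed as the cluster domain.

```python
import ipaddress


def pod_dns_name(pod_ip: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build <pod-ip-with-dashes>.<namespace>.pod.<cluster domain> (IPv4 only)."""
    ip = ipaddress.IPv4Address(pod_ip)  # raises ValueError on malformed input
    label = str(ip).replace(".", "-")
    return f"{label}.{namespace}.pod.{cluster_domain}"


print(pod_dns_name("10.233.127.250"))  # 10-233-127-250.default.pod.cluster.local
```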

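Custom domain names

CoreDNS is mostly run inside Kubernetes, but it also provides many plugins. Here the hosts plugin is used to serve custom domain names.

Syntax of the hosts plugin:

    hosts [FILE [ZONES...]] {
        [INLINE]
        ttl SECONDS
        no_reverse
        reload DURATION
        fallthrough [ZONES...]
    }

FILE: the hosts file to read and parse; defaults to "/etc/hosts" if omitted. The file is scanned for changes every 5 seconds.

ZONES: if empty, the zones of the configuration block are used.

INLINE: hosts-file content inlined in the Corefile. All INLINE lines before "fallthrough" are treated as additional content of the hosts file; identical entries in the hosts file are overridden, and the INLINE value wins.

fallthrough: if the zone matches and no record can be generated, the request is passed to the next plugin. If the zone list is omitted, this applies to all zones; if specific zones are listed, only those zones are affected.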
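To make the interplay of INLINE and fallthrough concrete, here is a deliberately simplified Python model of the behaviour described above. It is not CoreDNS code: `parse_hosts` and `resolve` are hypothetical helpers, zones, TTLs and reverse lookups are ignored, and `next_plugin` stands in for whatever plugin follows hosts in the chain.

```python
from typing import Callable, Dict, List, Optional


def parse_hosts(text: str) -> Dict[str, List[str]]:
    """Parse hosts-format lines ("IP name [alias...]") into name -> [IPs]."""
    table: Dict[str, List[str]] = {}
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, split on whitespace
        if len(fields) < 2:
            continue  # blank line, or an address without any name
        ip, names = fields[0], fields[1:]
        for name in names:
            table.setdefault(name.lower(), []).append(ip)
    return table


def resolve(name: str, file_text: str, inline_text: str, fallthrough: bool,
            next_plugin: Callable[[str], Optional[List[str]]]) -> Optional[List[str]]:
    """Inline entries override file entries; unknown names are handed to
    next_plugin only when fallthrough is enabled, otherwise None is returned."""
    table = parse_hosts(file_text)
    table.update(parse_hosts(inline_text))  # same name in both: inline wins
    answer = table.get(name.lower())
    if answer is not None:
        return answer
    return next_plugin(name) if fallthrough else None


file_text = "10.0.0.1 nginx.me.test\n"      # assumed hosts-file content
inline_text = "10.244.0.4 nginx.me.test\n"  # inline entry, as in a Corefile
print(resolve("nginx.me.test", file_text, inline_text, True, lambda n: None))
```

Without fallthrough, a name missing from the table ends the lookup in this model, which is why a hosts block that only holds a few custom names usually enables it.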

Edit the coredns ConfigMap. After saving, wait a short while for the configuration to be reloaded.

    kubectl edit configmaps -n kube-system coredns
    apiVersion: v1
    data:
      Corefile: |
        .:53 {
            kubernetes cluster.local in-addr.arpa ip6.arpa {
              pods insecure
              fallthrough in-addr.arpa ip6.arpa
              ttl 30
            }
            hosts /etc/hosts {
              10.244.0.4 nginx.me.test
              fallthrough
            }
        }

    [root@hujin-test ~]# kubectl exec -it my-busybox-6dffd8765-pkcl7 -- sh
    / # ping nginx.me.test
    PING nginx.me.test (10.244.0.4): 56 data bytes
    64 bytes from 10.244.0.4: seq=0 ttl=63 time=0.281 ms
    64 bytes from 10.244.0.4: seq=1 ttl=63 time=0.254 ms
    64 bytes from 10.244.0.4: seq=2 ttl=63 time=0.195 ms
    64 bytes from 10.244.0.4: seq=3 ttl=63 time=0.166 ms

References

- https://github.com/coredns/deployment/tree/master/kubernetes

- https://coredns.io/plugins/hosts/